Projects worked on for Engie Electrabel

(March 2018 - October 2018) In 2018 I worked most of the year as one of the lead Python developers at ETS group. This involved working on various parts of their many operational projects, mostly targeted at data mining of wind and hydro energy production in order to optimise revenue from renewables.

Octopus dataminer

Various windmill and data suppliers each had their own way of sharing energy production data. Some used nice JSON APIs, some a plain old CSV file, some just a flat text file, and some XML documents. In short, a whole plethora of different data exchange formats was in use.

The Octopus project gathers all this data with various worker classes and stores it in a structured PostgreSQL database. From there, the B2B web application Back to Earth shows this data and allows editing of contracts with suppliers, etc.

I wrote various workers within this existing micro framework, using TDD, to fetch data from various suppliers.

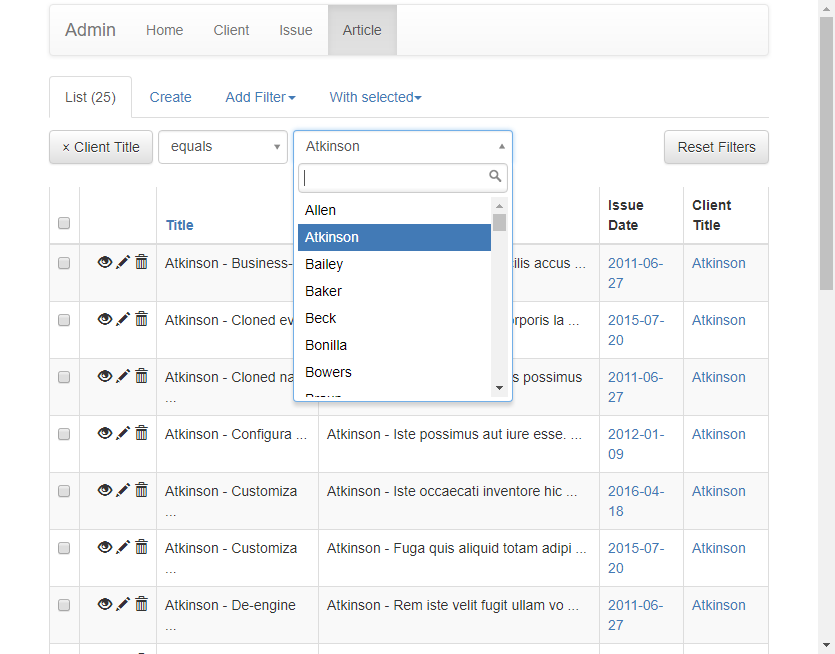
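Octopus's internal worker API isn't described here, so the following is only a minimal sketch of the idea, using hypothetical class names (`Reading`, `Worker`, `JsonApiWorker`, `CsvWorker`) and made-up payload shapes: each supplier-specific worker turns its own format into the same normalised record, so the storage layer only ever sees one shape.

```python
import csv
import io
import json
from dataclasses import dataclass
from datetime import datetime
from typing import List


@dataclass(frozen=True)
class Reading:
    """Normalised production record, whatever the supplier's original format."""
    site: str
    timestamp: datetime
    mwh: float


class Worker:
    """Base class: each supplier-specific worker turns a raw payload into Readings."""

    def parse(self, raw: str) -> List[Reading]:
        raise NotImplementedError


class JsonApiWorker(Worker):
    # Hypothetical payload: {"data": [{"site": ..., "ts": ..., "value": ...}]}
    def parse(self, raw: str) -> List[Reading]:
        return [
            Reading(item["site"], datetime.fromisoformat(item["ts"]), float(item["value"]))
            for item in json.loads(raw)["data"]
        ]


class CsvWorker(Worker):
    # Hypothetical semicolon-separated file with columns site;timestamp;mwh
    def parse(self, raw: str) -> List[Reading]:
        rows = csv.DictReader(io.StringIO(raw), delimiter=";")
        return [
            Reading(row["site"], datetime.fromisoformat(row["timestamp"]), float(row["mwh"]))
            for row in rows
        ]
```

In a TDD style, each worker gets a small fixture in its supplier's format, and the test asserts that the same `Reading` objects come out; persisting them to PostgreSQL is left out of this sketch.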

Back to Earth administration pages

Built on Flask, Flask-Admin and a lot of customisation done in blueprints, using a framework called BlueSnake that was developed internally by the Admian team. Basically they wrapped Flask and Flask-Admin with their own custom engine that allowed efficient creation of web apps, reusing a lot of the boilerplate code you would normally have to rewrite when starting a Python Flask app from scratch. IMHO it was nice, but documentation was lacking: you either had to already know things, or sift through a lot of well-written but sometimes difficult-to-read code to find your way around.

Nowadays, in 2020, I highly recommend the Starlette framework for Python. It shares the same goal of allowing faster development of new Python web apps, and it performs better for I/O-bound workloads thanks to its async, event-loop based design (similar to Node.js). It's also well documented and easy to start with, so new people on the team would pick it up more quickly than a custom in-house framework.
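Starlette request handlers are `async def` functions running on an event loop. The sketch below does not use Starlette itself; it is a plain `asyncio` illustration, with I/O simulated by `asyncio.sleep` and a hypothetical `fake_io_call` helper, of why that design helps: three independent 0.1 s waits inside one handler complete in roughly 0.1 s in total instead of 0.3 s.

```python
import asyncio
import time


async def fake_io_call(delay):
    """Stand-in for a database query or HTTP request; yields to the event loop while waiting."""
    await asyncio.sleep(delay)
    return delay


async def handle_request():
    # The three waits overlap on a single thread instead of running one after another.
    return await asyncio.gather(fake_io_call(0.1), fake_io_call(0.1), fake_io_call(0.1))


start = time.perf_counter()
results = asyncio.run(handle_request())
elapsed = time.perf_counter() - start
```

The gain only applies to time spent waiting on I/O; CPU-bound work inside a handler still blocks the loop and does not get faster.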

Various improvements were made, such as a Graphviz integration that gives a visual overview of the contracts through nice-looking, dynamically generated node graphs.

Pipedrive export/import

A smaller project revolving around importing and exporting data from a system called Pipedrive.

Pipedrive had an API, but it did not expose all data, and the team wanted to move to a more custom, in-house system.

They gave me the assignment to extract all the data using Python scripts. Within a week or two I had a working screen-scraping export script that logged in through a Chrome browser extension to obtain a proper session, and exported all the data they wanted into CSV files.
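The scraping itself depended on Pipedrive's pages and the browser session, so it is not shown. A minimal sketch of only the final export step, assuming scraped records arrive as dicts and using hypothetical field names: `csv.DictWriter` writes one row per record, leaving missing fields empty and dropping unexpected ones.

```python
import csv
import io


def export_to_csv(records, fieldnames, out):
    """Write scraped records (dicts) to a CSV stream with a header row."""
    writer = csv.DictWriter(
        out,
        fieldnames=fieldnames,
        restval="",             # fields missing from a record become empty cells
        extrasaction="ignore",  # keys not listed in fieldnames are dropped
    )
    writer.writeheader()
    writer.writerows(records)


buffer = io.StringIO()
export_to_csv(
    [{"name": "Acme", "value": 100}, {"name": "Globex", "stage": "won"}],
    ["name", "value"],
    buffer,
)
```

For a real file, open it with `open(path, "w", newline="", encoding="utf-8")`; the csv module documentation recommends `newline=""` so line endings are not doubled.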

Curtailment events

When maintenance happens, when weather conditions are not suitable, or when demand is lower than the supply available from other resources, windmills sometimes have to be shut down. This is called curtailment.

To automate the handling of these events and give a general overview of them, a project was started to make this more user friendly. The main complexity was getting the data out of a big database called tourbillion: the events could overlap and had to be normalised.
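The exact normalisation rules aren't described here. A minimal sketch, assuming events are hypothetical (turbine, start, end) tuples and that "normalised" means merging overlapping or touching periods per turbine, which is the classic interval-merging technique:

```python
from datetime import datetime
from typing import List, Tuple

Event = Tuple[str, datetime, datetime]  # (turbine, start, end), hypothetical shape


def normalise_events(events: List[Event]) -> List[Event]:
    """Merge overlapping or touching curtailment events per turbine."""
    merged: List[Event] = []
    # Sorting groups events per turbine and orders them by start time.
    for turbine, start, end in sorted(events):
        if merged and merged[-1][0] == turbine and start <= merged[-1][2]:
            # Overlaps (or touches) the previous event: extend it instead of adding a new one.
            _, prev_start, prev_end = merged[-1]
            merged[-1] = (turbine, prev_start, max(prev_end, end))
        else:
            merged.append((turbine, start, end))
    return merged
```

Sorting first makes a single pass enough: within one turbine, an event can only overlap the most recently merged period.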

I first tackled the data processing with some Celery background workers directly in Back to Earth. But the B2E platform had grown too large and had taken over many functionalities and responsibilities that did not really belong in it, so the processing had to be refactored out. This did, however, mean making more API calls between Octopus and Back to Earth (far more than when doing it directly in Back to Earth). IMHO Octopus itself was also burdened with doing too many things and had become a bag of connectors to all external services. Whenever data needed to be fetched or used somewhere, Octopus was extended, instead of a small separate microservice being created and used where needed (somewhat analogous to the god class code smell).

Pandas was used a lot internally. It's a huge Python data analysis library with a lot of cool features (highly recommended if you ever need to do data mining). My knowledge of pandas was sometimes insufficient, however, so I had to learn a lot about it before I could use its potential properly. Many features I could easily have written from scratch, but using pandas gave better runtime performance.
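A small illustration of that trade-off, assuming hypothetical hourly readings and function names: the same daily totals per windmill, computed once with a hand-written loop and once with pandas' `groupby`, which runs the aggregation in optimised vectorised code and scales much better on large frames.

```python
import pandas as pd

# Hypothetical hourly production readings (MWh) for two windmills.
readings = pd.DataFrame(
    {
        "turbine": ["WT1", "WT1", "WT2", "WT2", "WT1"],
        "timestamp": pd.to_datetime(
            [
                "2018-05-01 00:00",
                "2018-05-01 01:00",
                "2018-05-01 00:00",
                "2018-05-01 01:00",
                "2018-05-02 00:00",
            ]
        ),
        "mwh": [1.5, 2.0, 0.5, 0.75, 3.0],
    }
)


def daily_totals_loop(df):
    """Hand-written version: iterate row by row and accumulate in a dict."""
    totals = {}
    for row in df.itertuples(index=False):
        key = (row.turbine, row.timestamp.normalize())  # normalize() truncates to midnight
        totals[key] = totals.get(key, 0.0) + row.mwh
    return totals


def daily_totals_pandas(df):
    """Vectorised version: group by turbine and calendar day, then sum."""
    return df.groupby(["turbine", df["timestamp"].dt.normalize()])["mwh"].sum()
```

Both return the same numbers; the pandas version is shorter and avoids a Python-level loop over every row.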

Castor Portal

A Vue.js application was written as the frontend for maintenance events and a calendar view of planned curtailments. This was my first contact with Vue.js, but I had a lot of AngularJS and other JavaScript framework experience, which helped me get up and running pretty quickly.

I first fixed various bugs here and later extended the application with some pages for managing the curtailment events. These pages pushed their data to the JSON API of Back to Earth, where further background processing was done. After a few days I really started enjoying Vue.js, and I would recommend it to anyone struggling with the different Angular versions. By now, in 2020, I've mostly moved on to React.js whenever a larger frontend application is needed. Still, Vue.js and Angular have their places where they can really shine.

Meteologica Client

I wrote a small WSDL client for the Meteologica API, which various windmill farms expose to report their facilities, latitude/longitude and hourly electricity production figures. It was a small importable package that fetched electricity production data over SOAP, and it was also used to make curtailment and maintenance requests. All written in Python, of course.
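The Meteologica WSDL operations aren't documented here, so this is only a sketch of what such a client does under the hood, using the standard library and a hypothetical service namespace, operation name and response layout (`getProduction`, `hour` elements with a `time` attribute). In practice a SOAP library such as zeep can generate these calls from the WSDL instead.

```python
import xml.etree.ElementTree as ET

SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"  # SOAP 1.1 envelope namespace
SERVICE_NS = "http://example.com/forecast"  # hypothetical; the real WSDL defines its own


def build_request(operation, **params):
    """Wrap an operation and its parameters in a SOAP 1.1 envelope."""
    envelope = ET.Element(f"{{{SOAP_NS}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_NS}}}Body")
    op = ET.SubElement(body, f"{{{SERVICE_NS}}}{operation}")
    for name, value in params.items():
        ET.SubElement(op, f"{{{SERVICE_NS}}}{name}").text = str(value)
    return ET.tostring(envelope, encoding="unicode")


def parse_production(response_xml):
    """Extract (time, MWh) pairs from the hypothetical <hour time="..."> elements."""
    root = ET.fromstring(response_xml)
    return [(item.get("time"), float(item.text)) for item in root.iter(f"{{{SERVICE_NS}}}hour")]
```

The envelope would then be POSTed with `Content-Type: text/xml` and a `SOAPAction` header, as SOAP 1.1 requires.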

Day Ahead Client

Another small JSON API client (using HTTP basic authentication) for day-ahead market access: posting bids and requesting customer listings. In essence, it let you place bids to buy or sell electricity at certain rates, based on predictions of production and power usage throughout the day.
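The real endpoint and payload schema aren't given here, so this is a minimal standard-library sketch with a hypothetical base URL, hypothetical field names and hypothetical helpers (`basic_auth_header`, `build_bid_request`): it shows how a basic-auth JSON bid request is assembled without sending it.

```python
import base64
import json
import urllib.request

BASE_URL = "https://dayahead.example.com/api"  # hypothetical endpoint


def basic_auth_header(username, password):
    """HTTP basic auth: base64 of 'username:password' prefixed with 'Basic '."""
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def build_bid_request(username, password, hour, side, volume_mwh, price_eur_per_mwh):
    """Build (but do not send) a POST request for one day-ahead bid."""
    if side not in ("buy", "sell"):
        raise ValueError("side must be 'buy' or 'sell'")
    payload = {
        "hour": hour,
        "side": side,
        "volume_mwh": volume_mwh,
        "price_eur_per_mwh": price_eur_per_mwh,
    }
    req = urllib.request.Request(
        f"{BASE_URL}/bids",
        data=json.dumps(payload).encode("utf-8"),
        method="POST",
    )
    req.add_header("Content-Type", "application/json")
    req.add_header("Authorization", basic_auth_header(username, password))
    return req
```

Sending it would be `with urllib.request.urlopen(req) as resp: result = json.load(resp)`. Basic auth only base64-encodes the credentials, so it should always go over HTTPS.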

Diem2

A longer-running project developed by people with a lot of mathematical skill, but which suffered from a lack of software engineering good practices. It contained a huge amount of knowledge about risk aversion, indifference proxy pricing, correlation analysis, etc. Statistics, some machine learning and other techniques were implemented here to process the huge amounts of stored data on energy production and on buying and selling at the right prices. The problem was that the project as a whole lacked coherency, and much of it worked by reading and writing CSV files. It only worked if you knew which separate Python scripts to call with which CSV input files to generate a report or a set of results, which you would then feed into yet another standalone Python script for the next step.
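Diem2's actual scripts and formulas aren't shown here, and the refactor never happened. As one possible direction, a hedged sketch: turn each standalone script into a function that takes and returns in-memory data, and chain them in a single pipeline so CSV only appears at the edges. The step names and the `buy`/`sell` columns are hypothetical.

```python
import csv
import io
from statistics import mean
from typing import Callable, Dict, List

Rows = List[Dict[str, float]]


def load_prices(text: str) -> Rows:
    """Parse CSV text once, at the edge of the pipeline."""
    return [{k: float(v) for k, v in row.items()} for row in csv.DictReader(io.StringIO(text))]


def add_spread(rows: Rows) -> Rows:
    """Hypothetical step: spread between sell and buy price per row."""
    return [{**row, "spread": row["sell"] - row["buy"]} for row in rows]


def summarise(rows: Rows) -> Dict[str, float]:
    """Hypothetical step: average spread over all rows."""
    return {"mean_spread": mean(r["spread"] for r in rows)}


def run_pipeline(text: str, steps: List[Callable]) -> object:
    """Run the steps in order, passing each result straight to the next step."""
    data = load_prices(text)
    for step in steps:
        data = step(data)
    return data


result = run_pipeline("hour,buy,sell\n1,40.0,42.5\n2,38.0,39.0\n", [add_spread, summarise])
```

Each step can then be unit tested on its own, and the order of steps lives in code instead of in the original developer's head.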

I only had a few spare hours on a couple of days to look at it (in between developing features and improvements for the components above). But it quickly became clear that a lot had to be done to improve the usability and maintainability of this project. It most likely worked great in the hands of the original developer, but reusing it elsewhere was going to take significant effort. Unfortunately, my contract ended before this big refactor and cleanup of Diem2 could start. It also wasn't going to be extended, as they had just hired a lot of new people who were cheaper because they were directly on Admian's payroll. Not only was I an external contractor, my recruitment agency also took a significant cut. All of this added up and made it too expensive to keep me on board for another period.

Conclusions

I'm grateful for the experience of working at ETS group. The people were nice, and I greatly improved my Python, pandas and Vue.js skills while working on their various projects. Their Git and Atlassian bug-tracking integration, with a branch for each feature, was pretty sweet. Deployment and CI/CD were also done well. Code reviews were strict, and pre-commit was used together with tight flake8 rules. My only remark is that during some reviews, code was rejected solely because of differing flake8 styling preferences, causing extra work for both the reviewer and the submitter. This could instead have been fully automated, for instance as a pre-commit hook. A simple example of such automation is this autopep script: running scripts/autopep now reformats all my sources to the same style automatically. This is similar to what has been done for ages in Ruby projects with the rubocop -a command, which auto-corrects and checks all your sources.
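A minimal sketch of what such a scripts/autopep helper could look like (not the original script), assuming autopep8 is installed in the active Python environment; the function names `autopep_command` and `main` are hypothetical. It builds and runs an `autopep8 --in-place --recursive` command over the given paths, defaulting to the current directory.

```python
import subprocess
import sys


def autopep_command(paths, max_line_length=79):
    """Build the autopep8 command line; 79 matches flake8's default line length."""
    return [
        sys.executable, "-m", "autopep8",
        "--in-place",    # rewrite files instead of printing a diff
        "--recursive",   # descend into directories
        f"--max-line-length={max_line_length}",
        *paths,
    ]


def main(argv):
    """Reformat the given paths (or '.') and return autopep8's exit code."""
    paths = argv or ["."]
    return subprocess.run(autopep_command(paths)).returncode
```

To use it as an executable script, end the file with `raise SystemExit(main(sys.argv[1:]))`. Running the same formatter as a pre-commit hook takes style discussions out of code review entirely.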

My next customer was Meemoo.